The Economics of AI Agents: A High-Stakes Game

Why Inference-Time Compute Costs are Key to the Future of Smaller Players

AI Agents have been the most talked-about trend alongside LLMs, and the latest AI sensation is Manus, a very capable Agent. Many people are already evaluating Manus itself, so I am not going to add to the many reviews. Instead, it got me thinking about the economics of building an AI Agent.

An AI agent is a program designed to perform specific tasks autonomously, using artificial intelligence to make decisions and interact with its environment. AI agents can range from simple chatbots to complex systems that learn from data and adapt to new situations, often simulating human-like intelligence in their operations. Agents from Manus, OpenAI, and Google have shown they can be used to book travel, create itineraries, and so on.

An AI Agent uses one or more AI models (public LLMs and/or private models, depending on the task) to perform a specific task. The Agent mostly runs inference jobs on a model that has already been trained or fine-tuned with the necessary data set. Larger tasks are divided into smaller jobs that one or more AI Agents perform, and the outputs are put together to complete the task.

This means AI Agents are mostly running inference jobs and using “inference-time compute”. So, to understand the economics of AI Agents, we need to understand the economics of inference-time compute, a.k.a. test-time compute.

What it takes to run an AI Agent

We need the following parameters to understand what it takes to run an AI Agent:

Time needed to complete a job

Infrastructure needed to run a job

Number of jobs that the AI Agent needs to support

Let us consider an example scenario of running a Deep Research job on Perplexity, Manus, or a similar service. (These are simplified assumptions and estimates; real-world scenarios might have additional variables.)

Assumptions

1,000 daily active users, each running 10 searches (jobs) per day on average

An average job takes 5 minutes to complete (reasoning/Chain of Thought)

Peak usage: 10% of the daily queries arrive within one hour

NVIDIA A100 GPUs are assumed for inference, for illustration purposes, as they offer a balance of performance and cost-effectiveness. Choosing L4s, H100s, or hardware from another vendor may offer different performance characteristics or cost structures

Calculations

Total daily queries: 1000 users × 10 queries/user = 10,000 queries/day

Peak hourly queries: 10,000 × 10% = 1,000 queries/hour

Concurrent queries: (1,000 queries/hour × 5 minutes/query) / 60 minutes = ~83 concurrent queries

GPU Requirements

Assume each A100 can handle 10 concurrent queries. This is a simplified estimate: actual performance depends on the complexity of the AI model, the size of the input data, batching optimizations, and the specific requirements of each query

Required GPUs: 83 / 10 ≈ 9 A100 GPUs
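The sizing arithmetic above can be reproduced with a short Python sketch. The function and parameter names are illustrative assumptions, not part of any vendor tooling. The concurrency step is an application of Little's law (queries in flight = arrival rate × time per query), and the GPU count is rounded up because a fraction of a GPU cannot be provisioned.

```python
import math

def size_gpu_fleet(daily_users, queries_per_user, peak_fraction,
                   minutes_per_query, queries_per_gpu):
    """Return (daily queries, peak hourly queries, concurrent queries, GPUs)."""
    daily_queries = daily_users * queries_per_user
    peak_hourly = daily_queries * peak_fraction
    # Little's law: queries in flight = arrival rate x time per query.
    concurrent = peak_hourly * minutes_per_query / 60
    # Round up: a partially used GPU still has to be provisioned.
    gpus = math.ceil(concurrent / queries_per_gpu)
    return daily_queries, peak_hourly, concurrent, gpus

# Inputs taken from the assumptions listed in this article.
daily, peak, concurrent, gpus = size_gpu_fleet(
    daily_users=1000, queries_per_user=10, peak_fraction=0.10,
    minutes_per_query=5, queries_per_gpu=10)

print(f"{daily:,} queries/day, {peak:,.0f} in the peak hour, "
      f"~{concurrent:.0f} concurrent, {gpus} GPUs")
```

Changing any single input, for example halving the time per query, shows how sensitive the GPU count is to that assumption.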

Inference Costs

Average A100 GPU Cost: $3 per hour per A100 ($72/day or $2,160/month or $25,920/year) for 24/7 operation

Cost for 9 A100 GPUs: $27/hour or $648/day or $19,440/month or $233,280/year for 24/7 operation

This does not include the other infrastructure costs needed to support the query application

The "$3/hour" price point for an A100 assumes discounts or reserved instances from cloud providers (AWS/GCP), as list prices are closer to $4/hour
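As a rough check on the figures above, the following Python sketch converts an hourly GPU price into daily, monthly, and yearly costs. The function name is hypothetical. Note that the monthly and yearly figures in this article correspond to a 30-day month and twelve such months (360 days), which the defaults below mirror.

```python
def gpu_cost(gpus, price_per_gpu_hour, hours_per_day=24,
             days_per_month=30, months_per_year=12):
    """Return (hourly, daily, monthly, yearly) cost for an always-on fleet."""
    hourly = gpus * price_per_gpu_hour
    daily = hourly * hours_per_day
    monthly = daily * days_per_month      # 30-day month, as in the article
    yearly = monthly * months_per_year    # 12 x 30 = 360 days
    return hourly, daily, monthly, yearly

for gpus in (1, 9):
    hourly, daily, monthly, yearly = gpu_cost(gpus, price_per_gpu_hour=3)
    print(f"{gpus} GPU(s): ${hourly}/hour, ${daily:,}/day, "
          f"${monthly:,}/month, ${yearly:,}/year")
```

Passing `price_per_gpu_hour=4` instead gives the corresponding figures at the approximate list price mentioned above.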

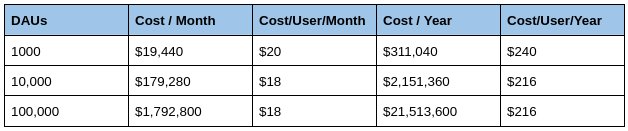

Scaling an AI Agent

Now let's understand what it takes to scale (a simple linear scaling example is shown here)

10x Scale (10,000 Daily Active Users)

Concurrent queries: 83 × 10 = 830

Required GPUs: 830 / 10 ≈ 83 A100 GPUs

100x Scale (100,000 Daily Active Users)

Concurrent queries: 83 × 100 = 8,300

Required GPUs: 8,300 / 10 ≈ 830 A100 GPUs

1000x Scale (1,000,000 Daily Active Users)

Concurrent queries: 83 × 1000 = 83,000

Required GPUs: 83,000 / 10 ≈ 8,300 A100 GPUs
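The linear scaling above can be sketched in a few lines of Python. To reproduce the article's figures, this sketch starts from the rounded baseline of 83 concurrent queries; starting from the unrounded 83.3 would give slightly higher counts (84, 834, and 8,334 GPUs). The monthly cost column is derived from the $3/hour and 30-day-month assumptions used earlier, and all names are illustrative.

```python
import math

BASE_CONCURRENT = 83      # rounded baseline from the 1,000-user estimate
QUERIES_PER_GPU = 10      # simplified per-A100 capacity assumed earlier
PRICE_PER_GPU_HOUR = 3.0  # discounted A100 rate assumed earlier

def scale(factor):
    """Return (concurrent queries, GPUs, monthly GPU cost) at a given scale."""
    concurrent = BASE_CONCURRENT * factor
    gpus = math.ceil(concurrent / QUERIES_PER_GPU)
    monthly_cost = gpus * PRICE_PER_GPU_HOUR * 24 * 30
    return concurrent, gpus, monthly_cost

for factor in (1, 10, 100, 1000):
    concurrent, gpus, monthly = scale(factor)
    print(f"{factor:>5}x: {concurrent:>6,} concurrent, "
          f"{gpus:>5,} GPUs, ${monthly:,.0f}/month")
```

Real deployments rarely scale this linearly, since batching efficiency and utilization tend to change with fleet size, so the output is best read as an upper-level estimate rather than a forecast.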

Additional costs

In addition, the following costs need to be accounted for.

Infrastructure costs

Data storage - High-capacity NVMe storage can cost $0.08 to $0.125 per GB per month

Networking - High-performance networking equipment and bandwidth costs, including data egress fees (up to $0.09 per GB) from cloud providers

Cloud infrastructure - Annual infrastructure costs for hosting and training the AI model

Software costs

AI Agent development costs - this depends on the complexity of the agent

Software licensing fees - for AI frameworks, databases, data analytics platforms, load balancers, API servers, and management software

Data costs - cost of data to train the AI models

Security and Compliance - expenses for firewalls, encryption, and intrusion detection systems

These costs are hard to quantify, as the options range from open source to managed solutions, but enterprises are very familiar with them. Suffice it to say that they increase the cost per user further.

Inference-time compute costs constitute a significant part of the overall costs and affect the pricing and margins of AI Agents.

How to reduce costs

Cost reductions are possible, but they require solving complex distributed computing problems. Some possibilities include:

GPU Utilization - improving GPU utilization with scale can reduce the inference-time compute footprint needed

Model Optimization - use quantized models for a smaller footprint and better efficiency without compromising accuracy on the metrics that matter most. For example, 8-bit models can achieve 99.9% accuracy recovery compared to FP32 while reducing model size to 25% of the original

Other Infrastructure Optimizations - better memory usage, caching techniques

Auto-scaling - auto scaling capabilities with Kubernetes integration to reduce average GPU footprint

Newer inference chipsets - companies are building specialized chipsets for inference that could potentially reduce compute and energy costs. It is still unknown what kind of cost reductions are possible at scale, but this certainly presents a huge opportunity for new-age silicon vendors
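To give a feel for what auto-scaling could save, here is a minimal Python sketch that compares a fleet sized for the peak hour against one resized every hour. It reuses the 1,000-user assumptions from earlier and adds one hypothetical assumption of its own: the 90% of queries outside the peak hour are spread evenly across the other 23 hours. It ignores scale-up latency, model load times, and minimum replica counts, so it is an optimistic bound rather than a prediction.

```python
import math

def gpus_needed(queries_per_hour, minutes_per_query=5, queries_per_gpu=10):
    """GPUs required to serve a given hourly query rate."""
    concurrent = queries_per_hour * minutes_per_query / 60
    return math.ceil(concurrent / queries_per_gpu)

daily_queries = 10_000
peak_queries = daily_queries * 10 // 100      # 10% arrive in the peak hour
# Hypothetical assumption: remaining queries are uniform over 23 hours.
off_peak_per_hour = (daily_queries - peak_queries) / 23

hourly_demand = [peak_queries] + [off_peak_per_hour] * 23

# Fixed fleet: provision for the peak hour all day long.
fixed_gpu_hours = gpus_needed(max(hourly_demand)) * 24
# Auto-scaled fleet: provision only what each hour needs.
autoscaled_gpu_hours = sum(gpus_needed(q) for q in hourly_demand)

print(f"Fixed fleet: {fixed_gpu_hours} GPU-hours/day")
print(f"Auto-scaled: {autoscaled_gpu_hours} GPU-hours/day")
```

Under these assumptions the auto-scaled fleet uses roughly half the GPU-hours of the fixed one, which is why the shape of the daily demand curve matters as much as the peak itself.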

Perplexity setting the pricing and impact for AI Agent companies…

Companies such as Perplexity have already set the price point for general-purpose Deep Research. Perplexity offers a free tier, a Pro tier at $20/month, and an enterprise version at $40/month.

From the cost computation above, these prices appear to be below cost. However, Perplexity is able to subsidize the Agent cost because it has ad revenue in addition to subscription revenue. It is likely losing money on searches and AI Agents (for now) in exchange for user acquisition, but it has economies of scale. In addition, with the Comet browser, Perplexity will have a stickier business.
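A back-of-the-envelope Python sketch makes the "below cost" comparison concrete. It divides the monthly GPU bill from the 1,000-user example by the number of users and compares the result with the $20/month tier. Treating daily active users as the whole user base is a simplifying assumption made here, as is ignoring every non-GPU cost; the function name is hypothetical.

```python
def break_even_paid_share(monthly_gpu_cost, users, price_per_month):
    """Return (GPU cost per user, share of users who must pay to cover it)."""
    cost_per_user = monthly_gpu_cost / users
    return cost_per_user, cost_per_user / price_per_month

# $19,440/month for 9 A100s serving 1,000 users, from the example above.
cost_per_user, paid_share = break_even_paid_share(19_440, 1_000, 20)

print(f"GPU cost per user: ${cost_per_user:.2f}/month")
print(f"Share of users who must pay $20/month to cover GPUs: {paid_share:.0%}")
```

With a free tier in the mix, nearly every user would need to be a paying subscriber just to cover GPU costs in this simplified model, before any of the additional costs listed earlier.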

However, this makes the case harder for other companies building AI Agents.

They need to offer value through custom AI Agents that general purpose AI Agents are unable to offer

They need to solve the inference-time compute cost problem under much harsher constraints

Switching costs for users are not high, so customer retention is tougher

Regulatory compliance costs could affect AI Agent economics as well

AI Agent Market and key takeaways…

NVIDIA's dominance in the GPU market, with an estimated 70-95% market share for AI chips and impressive gross margins of around 78%, has been a driving force in the AI boom. However, the sustainability of the AI Agent market hinges on a more balanced ecosystem. The long-term viability of the AI industry requires a shift in profit distribution from hardware manufacturers to software companies and AI Agent developers.

The current landscape presents significant challenges for startups and smaller players, who struggle to compete with the economies of scale and resources of tech giants. The success of the AI Agent market ultimately depends on creating an environment (a level playing field) where smaller players can innovate and compete effectively.