OpenAI: The Empire That Wants It All

From models to chips, clouds, agents, and social media — OpenAI is trying to own the entire AI stack. If it succeeds, it rewrites the playbook. If it fails, it could shake the AI economy itself

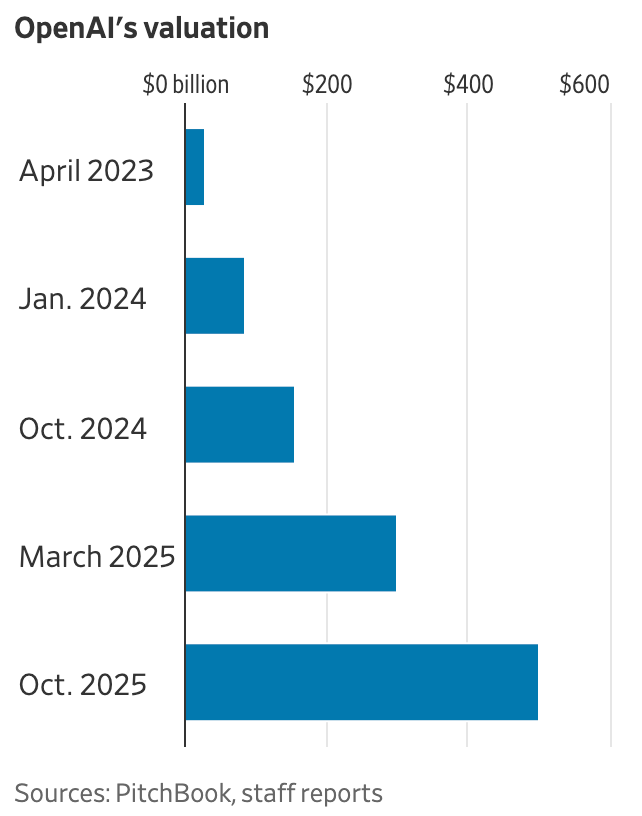

OpenAI was in the news this week for reaching a valuation of $500 billion, the highest for a private company ever. The scale of this, while not surprising anymore given everything going on in AI, is still astounding. That got me thinking about it.

OpenAI began in 2015 as a nonprofit AI research lab with a mission to build artificial general intelligence (AGI). It started off building foundational AI models, including GPT-3 (2020), DALL·E (2021), ChatGPT (2022), GPT-4 (2023), and Sora for video generation (2024). In 2019, OpenAI transitioned to a “capped-profit” model, securing major investments, most notably from Microsoft.

The $500 billion valuation of OpenAI rests on its major infrastructure deals (e.g. the Oracle Cloud partnership), some aggressive revenue goals, and the expected wide adoption of AI technology in general.

Some stats to get anchored on

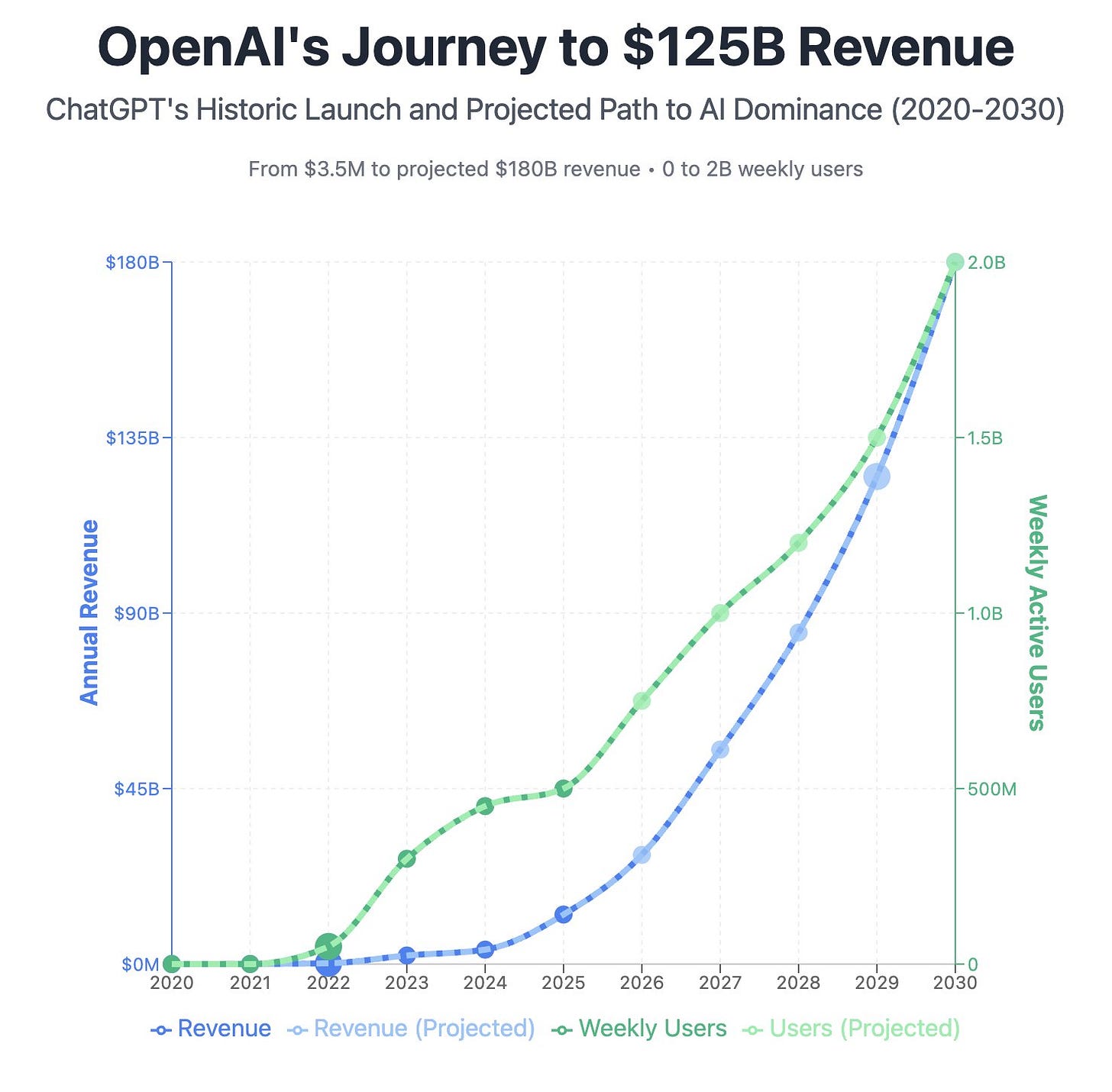

In 2024, OpenAI’s reported revenue was ~$3.7 billion

It reportedly had $4.3 billion in revenue in 1H 2025, with $8.5 billion in expense (i.e. $4.7 billion in loss in 1H), with a forecast of hitting ~$12.7 billion in revenue in 2025

Their projection is ~$125 billion in revenue by 2029 (and ~$174 billion by 2030)

As part of the Oracle Cloud deal, OpenAI has an annual obligation of $60 billion/year, which I have covered in my article The $300 billion bet

Getting from ~$12.7 billion (2025) to ~$125 billion by 2029 means ~10× growth over four years, a CAGR of roughly 77%. That’s extremely aggressive
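As a quick check on the growth arithmetic, here is a minimal sketch that derives the implied compound annual growth rate from the two revenue figures quoted above. The function names are my own and the inputs are the reported forecasts, not audited numbers. Under these inputs the implied rate comes out near 77% a year; a full 100% a year (doubling annually) would compound to 16× over four years rather than ~10×.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate needed to go from start to end in `years` years."""
    return (end / start) ** (1 / years) - 1


def growth_multiple(rate: float, years: int) -> float:
    """Total growth multiple after compounding `rate` for `years` years."""
    return (1 + rate) ** years


# Figures quoted in this article, in billions of USD (forecasts, not actuals)
revenue_2025 = 12.7
revenue_2029 = 125.0
years = 4

implied_rate = cagr(revenue_2025, revenue_2029, years)

print(f"Growth multiple 2025 to 2029: {revenue_2029 / revenue_2025:.1f}x")  # 9.8x
print(f"Implied CAGR: {implied_rate:.0%}")                                   # 77%
print(f"Multiple at 100% CAGR: {growth_multiple(1.0, years):.0f}x")          # 16x
```

Either way the conclusion holds: sustaining anything close to 77% annual growth for four straight years from a ~$12.7 billion base is a very steep ask.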

OpenAI had originally forecast profitability by 2029/2030, which as of now seems unchanged. While growing 10x over 4 years is aggressive enough, turning a profit amid such aggressive growth defies precedent.

How is OpenAI going about it?

OpenAI has expanded far beyond its original mission of developing core foundational AI models, embracing a broad suite of businesses and initiatives across consulting, enterprise software, agent platforms, and more.

Foundational model development

OpenAI started as a company building foundational models, and they continue to be its bread and butter. The models underpin every product and service it builds on top of them to monetize. So it needs to keep rolling out new models to keep pace with Meta, Google etc. in its quest for AGI. This remains a significant driver of infrastructure costs for OpenAI (and similar companies).

Application and SaaS Offerings, AI Agents and Autonomous Platforms

Foundational models are increasingly becoming commoditized. Recognizing this, OpenAI is aggressively moving into SaaS, launching enterprise applications that compete directly with major software platforms.

Examples include the Inbound Sales Assistant and GTM Assistant, with applications for sales enablement, marketing, customer support, analytics, and finance. These AI-powered apps are increasingly seen as competitors to Salesforce, HubSpot, and DocuSign.

API Layer, Agent SDKs

OpenAI is actively building several wrappers and orchestration layers on top of its core models, aiming to make API integration, tool-use, and multi-agent workflows easier and more powerful for developers.

The Responses API and OpenAI’s Connectors are official wrappers for third-party services that let models read and interact with external structured data

The Agents SDK is an orchestration framework for building, deploying, and managing AI-powered agents

Conversations API is a wrapper for managing long-running, multi-message interactions

Social Media and Consumer Platforms

OpenAI is reportedly making a major move into the social media space with a focus on platforms that rival TikTok, Instagram, X (formerly Twitter), and LinkedIn.

Sora 2 is designed as a social feed for sharing AI-generated short-form videos, mimicking features found on TikTok and Instagram Reels

OpenAI is rumored to be developing an internal prototype for a broader social media network, potentially combining features seen in X and LinkedIn

Hardware Ambitions

OpenAI’s hardware ambitions focus heavily on reducing dependency on external GPU suppliers by designing its own custom AI accelerator chips.

$10 billion+ Broadcom partnership to co-design custom AI inference chips produced by TSMC, expected to ship starting 2026

Continued multi-billion-dollar partnership with Nvidia for high-performance AI GPUs

Acquisition of Jony Ive’s hardware startup io Products for designing AI-oriented devices/wearables, “AI companions” etc.

Partnerships with Samsung Heavy Industries and Samsung C&T to develop floating data centers to address land scarcity and cooling costs

Consulting and Enterprise Transformations

OpenAI has launched a consulting division modeled after McKinsey and Palantir, targeting $10M+ enterprise deals, and partnerships with companies like Bain. OpenAI’s value proposition is speed and integration with solutions that are shipped in weeks, bypassing traditional consulting models.

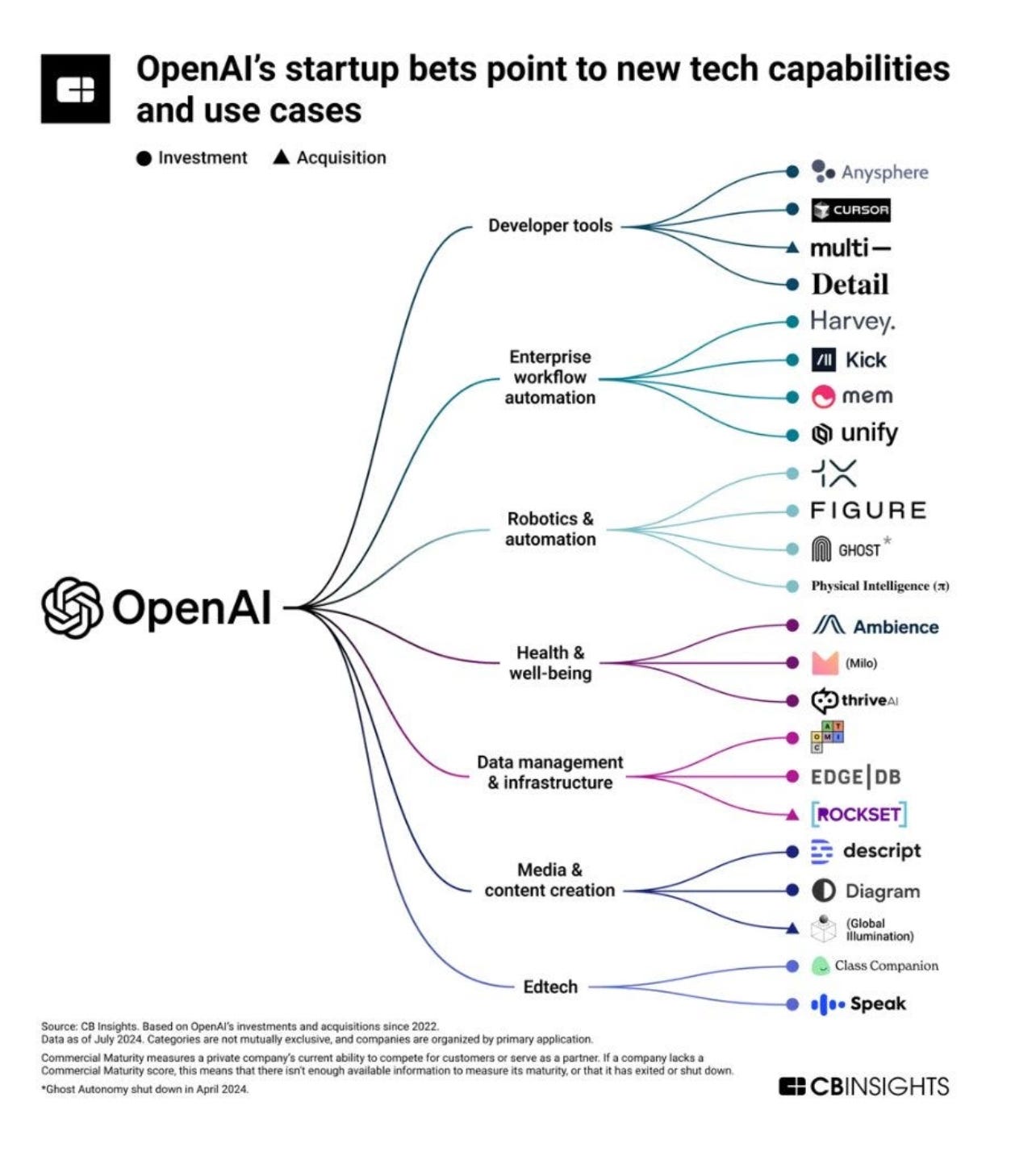

Investments and Strategic Partnerships

OpenAI actively supports the AI startup ecosystem through investments, primarily via the OpenAI Startup Fund established in 2021 (it manages approximately $175 million to invest in early-stage AI startups).

It has a broad set of partnerships (with each one being a strategic hedge against dependency, energy cost, supply constraints, regulatory headwinds etc.)

Hardware / chip suppliers - it has partnerships with Nvidia, Samsung, Broadcom, Hitachi etc. to ensure supply of critical hardware (GPUs, accelerators, etc.), reduce dependency, and co-develop custom hardware

Data center / infrastructure and compute providers - to build and scale massive infrastructure (data centers, cloud capacity, power) to support training & inference at very large scale. This includes the Stargate project (with Oracle, SoftBank, MGX), Google Cloud to diversify compute sources beyond Microsoft’s Azure, floating data centers with Samsung Heavy Industries etc.

Content and licensing / data partnerships - for data content to feed models, improve quality, improve reliability, avoid legal exposure. Examples include Future, Tom’s Guide, PC Gamer, Vox Media, News Corp, Axel Springer, Financial Times, The Atlantic.

OpenAI’s ambition borders on empire-building, unprecedented and unsettling at the same time

OpenAI and AI Value Stack

In my article AI Value Stack, I mapped out the key layers of the AI ecosystem: model labs (foundational AI developers), hyperscalers (large cloud providers), app and wrapper companies (building on top of AI models), neo-clouds (AI-specialized clouds), and silicon/chip firms, along with the economics of each layer.

OpenAI seems to be pushing into multiple layers simultaneously

Infrastructure: compute, data centers, chip supply

Wrapper/app layer: Agents, assistants, APIs to let developers and enterprises build on top

Hardware devices: AI companions, wearables, etc.

Ecosystem control / moat building: securing parts of supply chain, partnerships, in-house design

What this diversification means is that OpenAI is no longer just a model provider. It is trying to become an infrastructure giant, hardware roadmap owner, systems integrator, consumer device maker, and enterprise solutions provider.

These expansions multiply both opportunity and risk.

While it could enable potential capture of value (higher margin, more control), it also increases exposure to risks (CapEx, supply chain, execution complexity).

OpenAI’s rivals like Google, Meta, Anthropic, Cohere, and others are also aggressively investing in foundational models and SaaS, raising competitive risks.

The AI ecosystem is still evolving rapidly, and the market may reward multiple players or new approaches rather than a single winner.

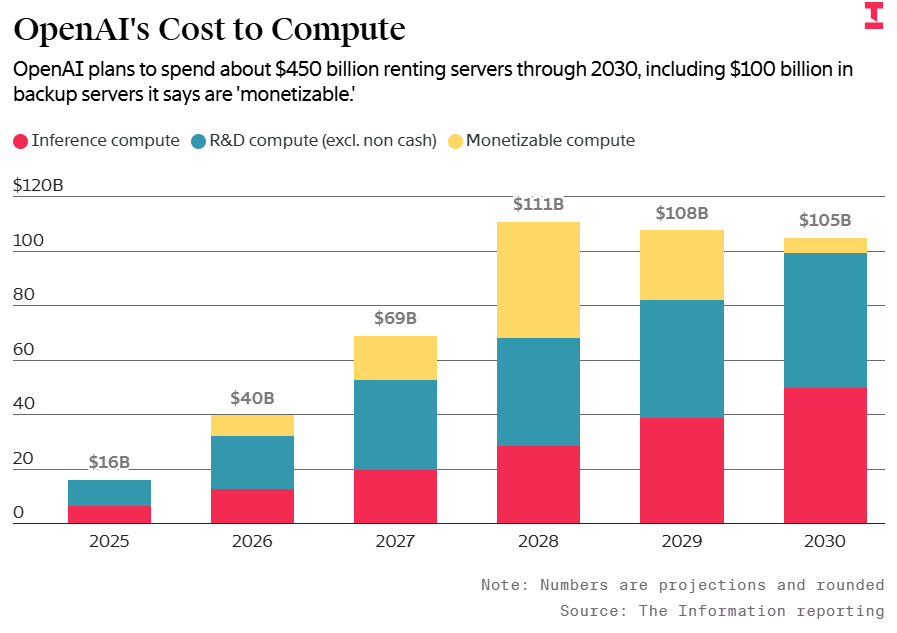

Compute Cost vs Revenue Risk

The Information’s report on OpenAI’s First Half 2025 Results has an interesting data point. One particularly striking insight is the projected drop in monetizable compute growth between 2028 and 2029. In effect, after years of seemingly exponential scaling, the usable compute (i.e. compute that can be monetized, not just provisioned) is expected to plateau or even contract sharply. That means OpenAI would need to lean heavily on alternate revenue streams (such as ads, partnerships, licensing, or data services) just to keep pace with its fixed infrastructure commitments.

But that expectation strikes me as exceptionally aggressive (maybe even unrealistic in such a short time frame), considering its capital commitments, e.g. $60 billion/year to Oracle.

What’s most striking isn’t that compute costs may decline, but that the compute capacity driving revenue appears to taper so sharply.

Given the timing, one can’t help but wonder whether these projections are structured to paint an aggressive path to profitability i.e. to justify current valuations ($500 billion) and investor expectations, rather than reflecting operational reality.

Empire of AI by Karen Hao

As journalist Karen Hao notes in her book Empire of AI, OpenAI has transcended the boundaries of a typical tech company. It now behaves more like a sovereign entity, one that governs compute, capital, and information at planetary scale. The question for society isn’t whether OpenAI will succeed, but whether the empire it’s building aligns with the public good it once pledged to protect.

Conclusion

No company in history has attempted to span as many domains (from model labs to cloud infrastructure, hardware, enterprise consulting, and even social media) all at once, at this scale. If it succeeds, this will be an unprecedented feat of innovation and scale, positioning OpenAI as the defining AI company of this generation.

Essentially OpenAI is aiming to “eat everyone’s lunch.”

However, this ambition comes with enormous risk. If it falters, the consequences could ripple across the AI ecosystem. With so much capital, infrastructure, and policy tied to OpenAI’s success, a failure here wouldn’t just hurt one company, it could stall the AI industry’s growth narrative and shake confidence across investors, suppliers, and enterprises.

OpenAI’s ambition to “do it all” reflects both its vision and its vulnerability.

A humorous touch - “Teja Main Hoon!” Moment

There’s a scene in the Bollywood cult classic Andaz Apna Apna where Paresh Rawal’s character Teja, impersonating his twin brother, declares “Teja main hoon, mark idhar hai!”, while plotting to start a murgiyon ka farm (chicken farm). It’s a comic moment where one man is trying to be everyone and do everything at once.

OpenAI today feels a bit like that. The Teja of the tech world. It wants to be the model builder, the cloud provider, the chip designer, the enterprise consultant, the app store, and even the hardware maker.