Innovation Has Left the Lab: How AI Broke the Innovation Playbook

From academia to trillion-dollar firms and sovereign funds—how innovation is being rewritten

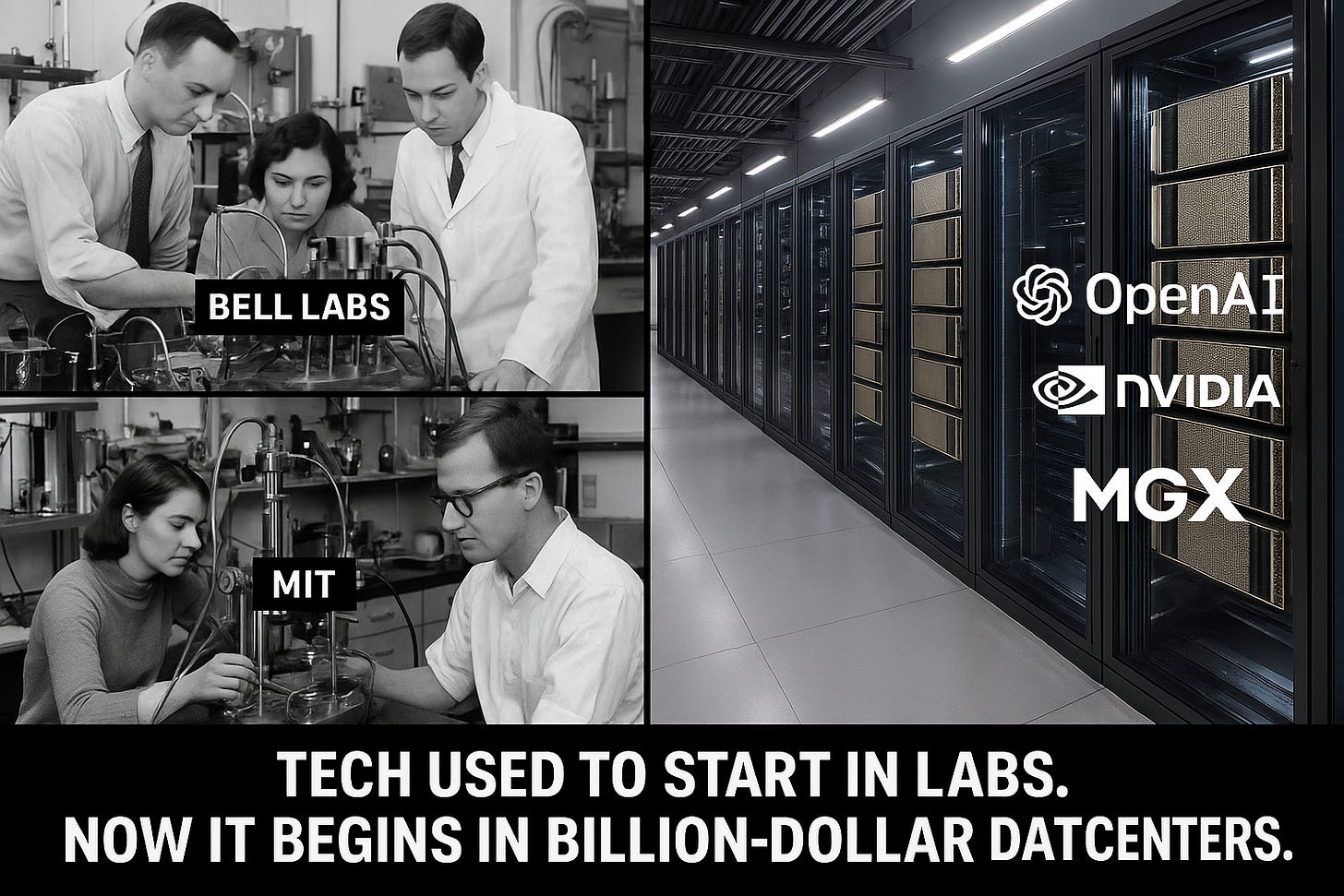

Most technologies that are now ubiquitous trace their roots to a university research lab, funded by a combination of federal research grants and industry collaboration aimed at commercialization. Once the research and technology reached a certain level of maturity, industry took over and built products around it for large-scale adoption.

For decades, this model worked well: the funding required was modest, university research could sustain the development of new ideas, and modern technology was still in its early phases.

Role of university research in modern technology

Some prominent examples include:

Operating Systems

Several university-led research initiatives have played foundational roles in the development of modern operating systems. The most influential of these projects not only advanced OS design but also introduced concepts and technologies that underpin today's computing environments.

MULTICS (Multiplexed Information and Computing Service): In the 1960s, a consortium of the Massachusetts Institute of Technology (MIT), General Electric (GE), and Bell Labs collaborated to build MULTICS. The project aimed to create a "computing utility" that would provide shared computing resources, much like a modern cloud service. MULTICS proved overly ambitious: Bell Labs withdrew in 1969, and the system never achieved broad commercial success. Even so, it generated many ideas in operating system design and directly inspired the development of UNIX.

UNIX: Ken Thompson and Dennis Ritchie developed UNIX at Bell Labs in 1969, but its rapid spread and evolution were driven by academic institutions. AT&T licensed UNIX to universities for a modest fee, which led to widespread adoption in the academic community and fostered operating systems research. The University of California, Berkeley, played a particularly prominent role through the Berkeley Software Distribution (BSD), which introduced networking capabilities (such as the TCP/IP stack) and other enhancements that became the foundation of modern OS networking and Internet infrastructure.

Linux, created in 1991 by Linus Torvalds while he was a student at the University of Helsinki, drew on UNIX principles. It was widely adopted in academia and became a key enabler of the open-source and cloud computing revolutions.

Silicon Design

Much like early operating systems and networking, foundational silicon innovations began in academia before scaling industry-wide.

RISC (Reduced Instruction Set Computing): Developed at UC Berkeley (RISC) and Stanford (MIPS) in the 1980s, RISC architectures radically simplified CPU design. These academic breakthroughs inspired commercial designs such as ARM, which now powers billions of mobile devices. RISC-V, which emerged from UC Berkeley in the 2010s, is now a global open standard.

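SPARC: Sun Microsystems, whose name and first workstation grew out of the Stanford University Network (SUN) project, introduced the SPARC architecture in the mid-1980s. SPARC was based on the Berkeley RISC designs, underpinned Sun's servers, and helped carry RISC design principles into mainstream commercial computing.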

CSAIL at MIT: MIT’s work on VLSI and chip design tools influenced modern multiprocessor and AI chip development.

Internet

Several university research initiatives, often in collaboration with government agencies, were pivotal in the creation and evolution of the Internet.

ARPANET: Funded by the U.S. Department of Defense’s Advanced Research Projects Agency (ARPA), ARPANET was one of the first operational packet-switching networks and the direct precursor to the Internet. In 1969, four sites (UCLA, the Stanford Research Institute, UC Santa Barbara, and the University of Utah) became the first interconnected nodes, exchanging data and demonstrating the viability of networked communication between geographically distant computers.

TCP/IP: Building on earlier packet-switching research, Vint Cerf (then at Stanford) and Bob Kahn (at ARPA) designed the TCP/IP protocol suite. ARPANET switched to TCP/IP in 1983, and it became the universal language of the Internet.

NSFNET: In the 1980s, the National Science Foundation (NSF) funded the creation of NSFNET, a backbone network connecting supercomputing centers at universities across the United States.

Internet2 Consortium: In 1996, a group of U.S. research universities founded the Internet2 consortium, which has since grown to hundreds of member institutions, to develop and deploy advanced networking technologies that support high-speed research and educational applications beyond the commercial Internet’s capabilities.

Many other technologies, from broadband, mobile, and cloud computing to quantum computing, biotechnology, and aerospace, can likewise be traced back to university research conducted in collaboration with industry.

The same has been true of AI, although AI's history is best viewed in two phases: the first runs from the field's early days until the early 2000s, and the second is the recent era of transformers and LLMs.

Early AI (1956 – early 2000s)

Dartmouth and AI

The 1956 Dartmouth workshop, organized by John McCarthy (Dartmouth), Marvin Minsky (MIT), Nathaniel Rochester (IBM), and Claude Shannon (Bell Labs), is widely recognized as the founding event of AI as a formal academic discipline.

The workshop's 1955 proposal coined the term "artificial intelligence," and the event brought together pioneering researchers who would drive the field's early breakthroughs.

University-led initiatives

MIT played a central role in symbolic AI and cognitive science, with research spanning computer science, psychology, and linguistics that advanced the modeling of cognition and symbolic reasoning.

Stanford made rich contributions to foundational AI languages and reasoning systems, while CMU contributed to symbolic AI, cognitive architectures, and machine learning.

Logic Theorist (Carnegie Institute of Technology, now CMU, together with RAND), ELIZA (MIT), and SHRDLU (MIT) were among the earliest university AI projects.

These university-led initiatives established the core ideas, communities, and technical foundations that continue to shape AI today.

Modern AI (early 2000s – now)

Research in this phase has unfolded very differently from earlier technologies, including AI in its own early phase.

Shift from academic to corporate research

Unlike earlier cycles, in which breakthroughs emerged from university labs, today's frontier AI research is dominated by well-funded corporate entities. Google’s 2017 paper “Attention Is All You Need” introduced the transformer architecture, which replaced sequential recurrence with attention; this made training massively parallel and scalable and enabled the large language model (LLM) revolution.

Modern AI research requires large compute resources (and data) that most academic institutions lack. A 2020 study found that "only certain firms and elite universities have advantages in modern AI research" because of what is known as the “compute divide”.

A 2021 study highlights that the AI field has seen a “growing net flow of researchers from academia to industry,” especially among elite-ranked universities and major firms like Google, Microsoft, and Facebook. Research from 2024 shows that while academia still produces most AI papers, industry teams generate the majority of high-impact, state-of-the-art work, often outperforming academia on citations and breakthrough impact.

Capital investment needed exceeds academic funding capacity

Training large models requires extensive investment in GPUs, high-performance data centers, and storage, at a scale universities simply cannot match. Global private investment in AI is projected to reach over $200 billion annually by 2025, an unprecedented concentration of capital in a single technology sector compared with past cycles.

While past technology cycles relied on a combination of federal grants and venture capital, this cycle is shifting toward massive corporate and sovereign investment in addition to venture capital.

Sovereign capital is entering because AI could reshape the political, economic, military, and security apparatus of nations, and countries want to control their own destinies. They therefore see AI as strategic national infrastructure, not just a commercial opportunity. Examples of sovereign investment include:

The UAE's MGX, linked to Mubadala and G42, is targeting up to $100 billion in AI infrastructure investment, including backing OpenAI.

Gulf sovereign funds (Saudi PIF, UAE’s Mubadala/ADIA, Qatar and Kuwait funds) have recently increased AI investment fivefold, reflecting national strategies to diversify away from oil.

ADNOC (UAE’s state oil firm) calls AI a “once-in-a-generation investment opportunity,” pledging $440 billion in U.S. energy and AI projects.

Implications

This trend has major implications for both the research community and the broader ecosystem:

De-democratization of research: Research may become less open and accessible going forward. Academic institutions are increasingly sidelined, which could have broad consequences for the future of research itself, because universities not only drive research but also train the workforce and the founders who commercialize new technologies.

Data and compute centralization: The investment required and the stakes involved are now so high that data and compute risk becoming concentrated in a few hands. This could limit access for smaller organizations and academic researchers, potentially stifling open innovation and reinforcing the “compute divide”.

Geopolitical stakes: Because AI is seen as national infrastructure, the geopolitical stakes around AI access and governance are rising, as export controls on advanced GPUs already illustrate. This heightens regulatory, security, and geopolitical risks, as control over critical AI infrastructure and data becomes a lever of national and corporate power.

Conclusion

Historically, fundamental innovations (from OS design and the Internet to silicon architecture) started in universities, were backed by federal grants, and matured through industry collaboration. In each cycle, academia planted the seeds, and commercialization followed.

Today’s AI paradigm diverges sharply. Frontier innovation now requires monumental capital (compute, data, data centers) that only private firms and sovereign entities can deploy. Academic institutions are being pushed to the margins, while national interests concentrate control and resources.

This inflection point raises critical questions.

Will this shift stifle AI research innovation?

Can academic-driven open innovation survive within an increasingly centralized AI ecosystem?

As governments and private entities rush for dominance, preserving equitable access and transparency seems more vital than ever. Universities might adapt through public-private partnerships and new funding models to remain relevant in frontier research.