100,000 GPUs Later: The Grok 3 Gambit

The AI Juggernaut's Latest Leap

Not too long ago, DeepSeek took the AI world by storm with a model that rivaled the best-performing ones at a reduced training cost, using optimized distillation and a mixture-of-experts architecture to achieve high efficiency and strong reasoning capabilities.

Now Grok 3 is creating a buzz, pushing boundaries by using 100,000 Nvidia H100 GPUs (later expanded to 200,000 GPUs) for a reported 200 million GPU-hours of training. xAI claims it outperforms GPT-4o and DeepSeek V3 on math, science and coding benchmarks, all within 12-18 months of xAI’s founding.

It seems like a Fast and Furious movie. A month seems to be an eternity in the world of GenAI!

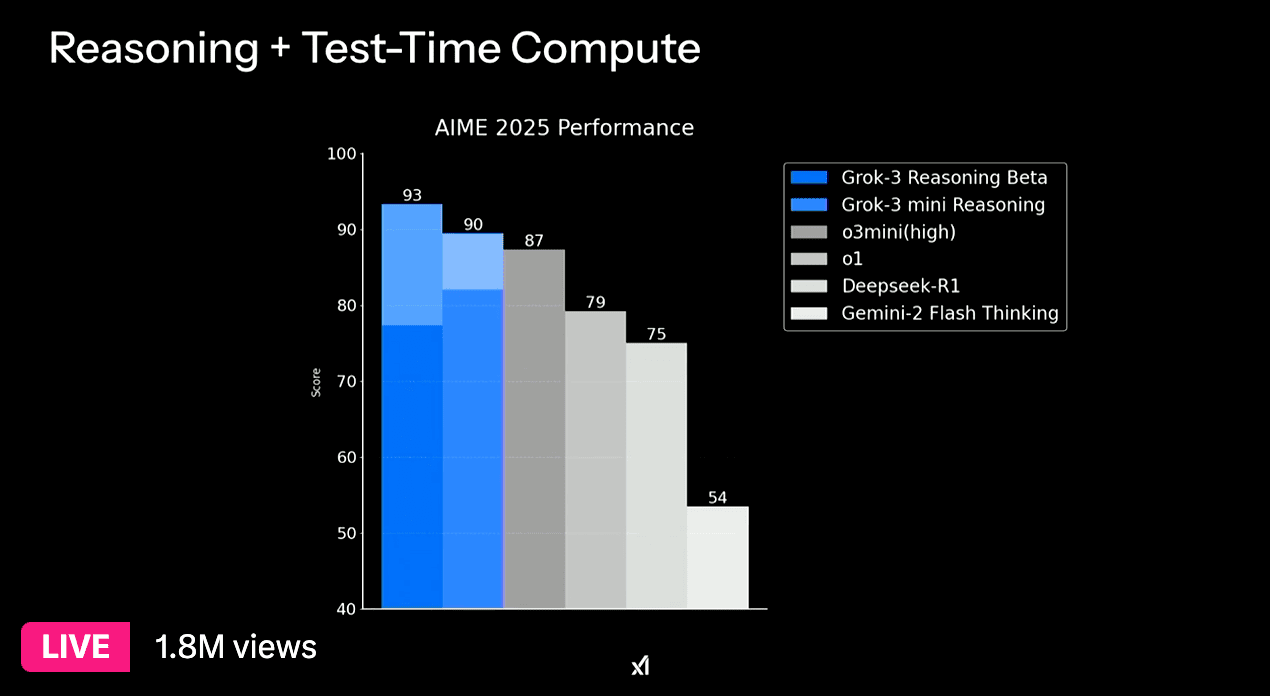

xAI’s Colossus cluster provides massive parallelism, which significantly reduces time-to-train when scaling to thousands of GPUs. In addition, the cluster now serves as test-time compute for Deep Search and for continuous self-evaluation and self-correction mechanisms.

Here’s a cursory cost estimate for Grok 3.

Hardware Costs:

Initial deployment: 100,000 Nvidia H100 GPUs

Expansion: Additional 100,000 GPUs (mix of H100 and H200)

Estimated cost per H100: $25,000

200,000 GPUs x $25,000 = $5B (applying the H100 price to all units, including the H200s)

Energy Costs:

Training duration: 200 million GPU-hours

Power consumption: 250 MW for the cluster

Energy consumed = 250 MW x (200,000,000 GPU-hours / 100,000 GPUs) = 250 MW x 2,000 hours = 500,000 MWh

Energy cost = 500,000 MWh x $70/MWh = $35M

Additional costs: facility, cooling infrastructure, operational expenses
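The arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch that uses only the figures quoted in this post. The function and parameter names are my own, and it assumes every GPU is priced like an H100, that the 200 million GPU-hours ran on the initial 100,000 GPUs, and that the 250 MW draw was constant for the whole run.

```python
def estimate_training_cost(
    gpu_count=200_000,             # initial 100,000 + expansion of 100,000
    cost_per_gpu_usd=25_000,       # assumption: H100 price applied to all GPUs
    gpu_hours=200_000_000,         # training duration quoted above
    training_gpus=100_000,         # assumption: GPUs active during training
    cluster_power_mw=250,          # assumption: constant draw for the whole run
    energy_price_usd_per_mwh=70,
):
    """Back-of-the-envelope hardware and energy cost, mirroring the list above."""
    hardware_usd = gpu_count * cost_per_gpu_usd
    # Wall-clock hours if all training GPUs run in parallel.
    wall_clock_hours = gpu_hours / training_gpus
    energy_mwh = cluster_power_mw * wall_clock_hours
    energy_usd = energy_mwh * energy_price_usd_per_mwh
    return {
        "hardware_usd": hardware_usd,
        "wall_clock_hours": wall_clock_hours,
        "energy_mwh": energy_mwh,
        "energy_usd": energy_usd,
    }


if __name__ == "__main__":
    estimate = estimate_training_cost()
    print(f"Hardware: ${estimate['hardware_usd'] / 1e9:.2f}B")
    print(f"Wall-clock training time: {estimate['wall_clock_hours']:,.0f} hours")
    print(f"Energy: {estimate['energy_mwh']:,.0f} MWh")
    print(f"Energy cost: ${estimate['energy_usd'] / 1e6:.1f}M")
```

With these inputs the run works out to 2,000 wall-clock hours (roughly 83 days), and the energy bill comes to under 1% of the hardware outlay, which is why the rest of this post treats the $5B hardware figure as the headline number.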

Evaluating the ROI

Elon Musk has the means to fund this in order to catch up and be counted among the frontrunners in the GenAI arms race. While that standing alone may be enough of an ROI from xAI’s perspective, I think the question is still interesting and worth evaluating.

To evaluate whether the $5B investment in training Grok 3 (plus energy costs) offers a good ROI, we need to evaluate both technical and business impact.

Chain of Thought Reasoning

Ability to reason through complex problems step-by-step

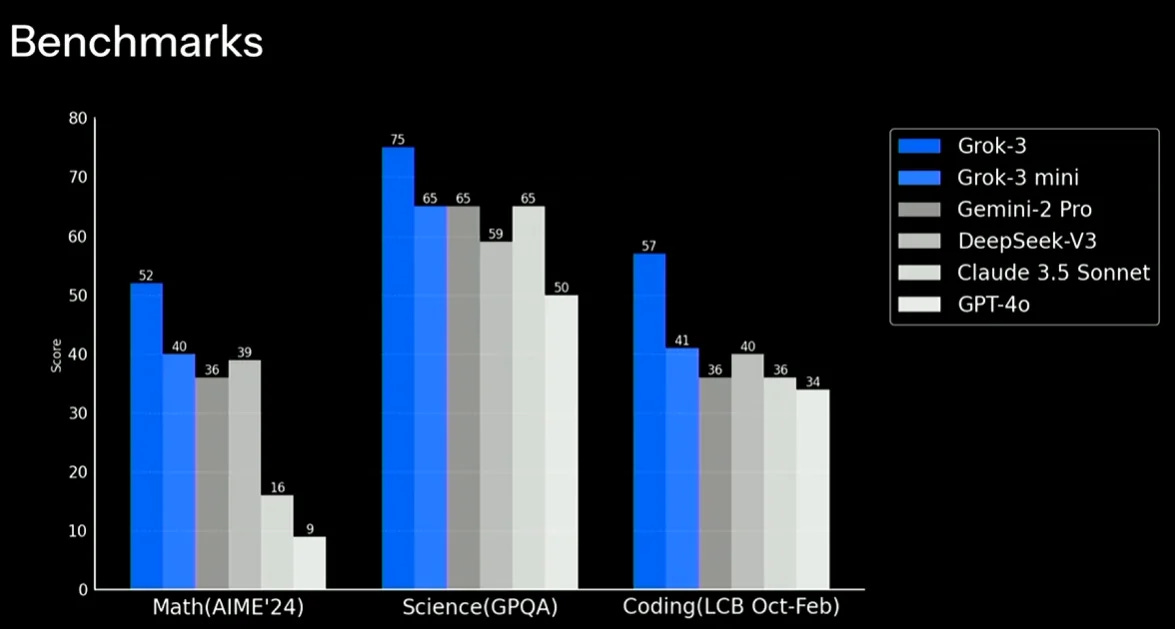

Grok 3 claims to beat GPT-4o on benchmarks such as AIME (math questions) and GPQA (PhD-level physics, biology, and chemistry problems). While independent evaluations are in the works, the claims are impressive.

Accuracy

Task-specific accuracy, such as question-answering benchmarks or domain-specific datasets.

xAI claims that Grok 3 outperforms OpenAI's GPT-4o, Google's Gemini, DeepSeek's V3 model, and Anthropic's Claude across various benchmarks. The claims are impressive, pending independent evaluations.

Scaling efficiency

Scaling with increased test-time compute

At first glance, it appears Grok 3 showed minimal improvements despite using 10x more compute than its predecessor Grok 2, which may not justify the massive compute and energy costs. DeepSeek has shown competitive performance through algorithmic innovation rather than brute-force scaling, an approach I suspect most of the industry will be considering. This is where I reckon more work will be done in the near future.

Latency and Throughput

Number of queries processed per second

Grok 3 appears to demonstrate superior latency and throughput compared to other leading AI models. It appears to respond 22% faster than GPT-4o, and code generation seems to be faster too. This is not surprising and can be attributed to the Colossus infrastructure. Is 22% good enough? More testing will reveal more granular benchmarks.
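A figure like "22% faster" can be read two ways: as 22% less time per response, or as 22% more responses in the same time. These are not the same number. The sketch below converts between the two readings. The helper names are hypothetical, and the only input is the 22% figure quoted above, not a measurement of my own.

```python
def latency_reduction_to_speedup(latency_reduction):
    """If each response takes `latency_reduction` less time (0.22 = 22%),
    return the fractional gain in responses per unit time."""
    return 1 / (1 - latency_reduction) - 1


def speedup_to_latency_reduction(speedup):
    """If `speedup` more responses are served per unit time (0.22 = 22%),
    return the fractional reduction in time per response."""
    return 1 - 1 / (1 + speedup)


if __name__ == "__main__":
    claimed = 0.22
    print(f"22% lower latency  -> {latency_reduction_to_speedup(claimed):.1%} higher rate")
    print(f"22% higher rate    -> {speedup_to_latency_reduction(claimed):.1%} lower latency")
```

Depending on the reading, the same headline corresponds to roughly a 28% higher response rate or roughly an 18% lower latency, so more granular benchmarks should state which one they measure.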

Hallucination Rate

How often it generates incorrect or nonsensical outputs

This is another area that will be explored further in the coming weeks. An initial review by Gary Marcus includes some tests on hallucinations. Various opinions seem to suggest it could be in the same ballpark as GPT-4o and the Gemini 2 models. This issue continues to be a significant concern, particularly for critical applications in healthcare and law.

Ultimately, as LLMs fundamentally operate on probabilistic pattern recognition rather than true understanding, simply increasing computational power may not fully resolve hallucinations. Techniques like Chain of Thought reasoning can help mitigate the problem, but completely eliminating hallucinations remains an elusive goal in the current AI landscape.

Security

There are no notable security improvements mentioned specifically for Grok 3 compared to other models. There have been some reports of security vulnerabilities. These highlight the ongoing challenges in AI security and the need for robust server-side access controls in AI implementations.

Additional considerations

Some additional metrics would be interesting to evaluate, such as energy efficiency (energy consumption per inference) compared to other models.

Conclusion

While the claims and initial results seem to show incremental improvements, the gains don’t seem to be proportional to the infrastructure Grok 3 was trained on relative to other models.

ROI would also depend on adoption rates and downstream applications / innovation Grok 3 can potentially enable.

Ultimately, the value of Grok 3 is subjective, varying based on individual perspectives—whether you're a user, an investor, or affiliated with a competing AI firm. Regardless, Grok 3's emergence signifies the relentless advancement of AI technology - the GenAI juggernaut moves forward.

It would be great to see the response to the ROI question from Grok 3’s Chain of Thought!